Disclaimer: I may have commercial relationships with companies mentioned in the piece. None of this is financial advice, do your own research, all the good stuff.

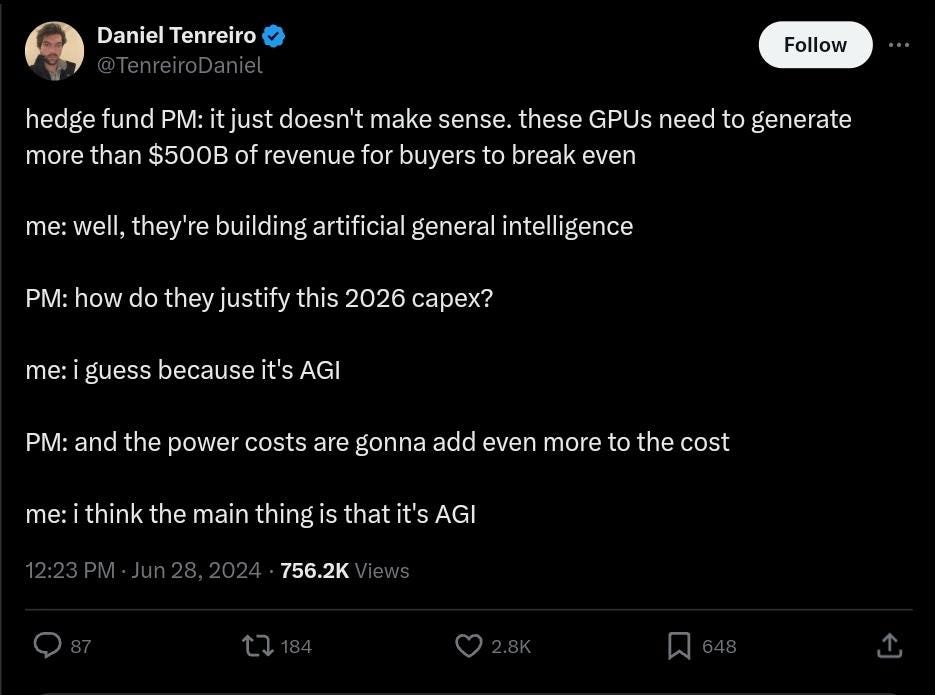

In the financial markets, we’re in the part of the cycle where people are beginning to question whether all of this AI investment will pay off. The tweet that finally prompted me to write about it is this one:

While it’s intended to be pretty one-sided, the reason I want to write about it is that I relate to both sides. Both the hedge fund PM and Daniel are right within their own frameworks. From the HFPM’s point of view, we’re investing a lot in AI, and AI company revenues are nowhere near the order of magnitude needed for those investments to make economic sense. It’s reminiscent of the dotcom bubble fiber build-out. Ben Thompson has a great take on it: “right now, if you’re a tech company CEO, you need to make AI investments, whether they will pay off or not. Because if you don’t, you will get fired today”. So many companies are making uneconomic decisions, and it’s a bubble.

From Daniel’s point of view, AGI will be the most valuable thing ever to exist by a wide margin. How much would a technology that automates all human labor and comes up with scientific advances beyond our wildest dreams be worth? A quadrillion dollars? Ten? It stands to reason that it’d be orders of magnitude more than any amount of money we count today. So ANY amount of investment we make today that will result in AGI is worth it. This also makes some sense.

However, both of them can’t be right at the same time. While I’m definitely not smart enough to call which one is wrong, I can outline the factors that will determine who’s right. In a way, the HFPM is making the status quo claim, so the burden of proof on him is lower. So it makes sense for us to examine Daniel’s claim with more rigor and determine what has to happen for him to be right.

For investors to make money “because AGI”, a few things have to happen:

1. We have to create AGI.
2. It has to be created by the same entities that are making the investments today. If AGI is created by companies that don’t yet exist, today’s investments don’t pay off [1].
3. Investors have to correctly identify the companies that will create AGI.
4. It has to happen in a short enough time frame. If it takes 50 years to invent AGI, many present-day investors will die before then, unable to capture any of the value.
5. We can’t have AGI result in human extinction.
6. AGI can’t result in such a drastic change in society that the concept of money as we know it disappears.
7. The companies that create AGI must be allowed to capture the value from creating it.

Now let’s go through these one-by-one and estimate the odds. This will involve a lot of guesswork, and you can substitute your own.

Humans creating AGI seems very likely. I’d wager that short of an extinction event, we’re 99% likely to eventually do it. Since the time frame is unknown, estimating the odds of extinction risk is a fool’s errand, so let’s say 95% total.

We can bundle points 2 and 4 together since they’re mostly a timeline question. Metaculus currently estimates the probability of AGI before 2074 at 92%. But I don’t think present-day AI companies will make it that far if they don’t reach AGI soon. The odds of AGI by 2040 (by which point I expect investors’ patience with e.g. OpenAI to run out) are 70%, which seems like a reasonable guess to me. So, 70% for points 2 and 4 combined.

As far as investors correctly identifying the winners, let’s say there are 5 leading AI labs. So for any one of them, the naive odds are ~20%.

AGI resulting in human extinction is a doozy. People estimate P(doom) all over the map, from 0.001% to 99.999999%. As tempted as I am to say 50-50, I’ll go with the center point of people I consider pretty reasonable and estimate it at 20%. So, the probability of success is 80%.

Drastic changes to human society after AGI are almost guaranteed. Will money persist? Lots of people are talking about some version of post-AGI communism. I can see how labor and capital become effectively free, but land limitations persist. More people want to live in a townhouse in Manhattan than there can be townhouses in Manhattan, so we need some mechanism of distribution. I’d give 90% odds that money persists, even if lots of things become free (or covered by generous UBI).

The last question seems the most troublesome for investors from my perspective. Let’s say a private company does create a quadrillion dollars of economic value. There is no human government that will let the shareholders of one company capture >99% of value in the world. It seems likely that they will be allowed to become rich, but not quadrillion dollars rich. Here we run into a very wide spectrum of outcomes, so I’m going by vibes alone. My odds for investors being allowed to capture enough value to justify “any amount of investment we can make today” are 20%.

Putting it all together (and omitting the first 95% number because it’s already covered by the timeline question), we get 0.7 × 0.2 × 0.8 × 0.9 × 0.2 ≈ 2% odds. Again, if you assume that the economic value of AGI is OOMs higher than the global GDP today, it’s a positive expected value bet. But how much of your money would you put in a very positive EV bet with 2% odds and an uncertain payout date that’s years or decades into the future? How confident are you that you’ll hold it throughout that whole period without selling when research progress looks like it’s slowing down?
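If you want to substitute your own guesses, here is a minimal Python sketch of the arithmetic. The probabilities are the ones from this post. The names `estimates`, `combined_odds`, and `break_even_multiple` are just illustrative. It assumes the factors are independent (which is what multiplying them implies) and that a failed bet loses the whole stake.

```python
from math import prod

# Probabilities taken from the estimates in this post; swap in your own.
estimates = {
    "AGI by 2040, built by today's companies (points 2 and 4)": 0.70,
    "investor picks the winning lab (1 of 5)": 0.20,
    "no human extinction": 0.80,
    "money persists": 0.90,
    "investors are allowed to capture the value": 0.20,
}

def combined_odds(probs):
    """Multiply the probabilities, treating the factors as independent."""
    return prod(probs)

def break_even_multiple(p):
    """Payout multiple at which a bet with win probability p has zero
    expected value, assuming the full stake is lost otherwise."""
    return 1.0 / p

p = combined_odds(estimates.values())
print(f"combined odds: {p:.2%}")                                       # combined odds: 2.02%
print(f"break-even payout multiple: {break_even_multiple(p):.1f}x")    # break-even payout multiple: 49.6x
```

In other words, under these guesses the payout only has to be about 50x for the bet to break even on paper, which is a low bar if AGI really is worth OOMs more than global GDP. The hard part is everything the arithmetic leaves out: sizing, timing, and holding on.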

All of this is obviously subject to a ton of uncertainty. As far as I can tell, people on the cutting edge of AI give shorter AGI timelines than people outside of the field. But I don’t know how much of that is better understanding of the research progress, and how much is good old self-interested wishful thinking.

[1] It’s a little more complicated than this. Let’s say Future Company Z invents AGI, but to run it, it has to buy ALL the GPUs from everyone else. Current GPU owners will make some money but probably not a lot; FuCoZ will capture most of the value. Also, it’s entirely possible that the AGI hardware will be made by companies that don’t yet exist.

Do people within a field tend to have better estimates? I suspect the answer is no, except for a subset of very simple problems.
