What is to be done?

But actually what comes before "What is to be done?"

There is an old saying that there are two fundamental questions of Russian reality: What is to be done? and Who is to blame¹? The two questions are the titles of novels by Chernyshevsky and Herzen, respectively, with What is to be done? further immortalized by Lenin, who later published an essay with the same title.

Assigning blame can wait until there’s nothing whatsoever to be done, so let’s focus on the first question. This essay is my attempt to answer it. I do not claim that there is any original thinking, but I don’t think that any is required from a statement of values. While coming up with a novel scientific idea is like blazing a trail, stating values is more like etching something into stone. You benefit from repetition, which enables ever-increasing precision.

What is to be done? My sense is that we can’t find a final answer to this question until we understand what’s going on. We have made some solid advances in understanding the mechanics of what’s going on (Electromagnetism! General relativity! Genetic code!) but we haven’t really made any progress on what I consider to be the three fundamental problems of our reality²:

1. Why is there something instead of nothing?

2. Can we reverse entropy?

3. The hard problem of consciousness.

Some minor progress has been made on question number 2, in that we can phrase it better: old formulations of the question necessarily involved all kinds of deities. And it seems like we’re getting our scientific cannons in place to begin the Siege of Constantinopleciousness³, but overall we have made very limited progress on these fundamental problems.

In effect, I choose to punt on What is to be done? in favor of What the hell is going on here, anyway? The way I see it, we woke up in a dark room and we’ve been able to light up some of it, and, while, sure, we can focus on fighting over what wallpaper we use, I’d much rather explore the rest of it, and even try to see what’s outside⁴.

What comes next is a bit of a leap, but I don’t think it’s a crazy one to make. I think that the best thing we can do right now to improve our odds of figuring out what’s going on is increasing the level of intelligence available. Humans have about three times as many neurons as gorillas, and the jump in the level of understanding the Universe between the two species is remarkable⁵. It seems quite reasonable to assume that an order of magnitude jump in the number of neurons would propel us to a similarly great ascent in our understanding of Life, the Universe and Everything.

In a way, I already see us running up against our cognitive limitations. The image I have in my mind for our cognitive limitations is this map of metabolic pathways:

While, sure, we understand this map in that we can zoom in on any small bit of it and describe the specific chemical reactions there, I don’t think it’s possible for a human to fully load the whole system into their brain and be able to mentally tinker with it and perfectly predict the results of some changes we might want to introduce. So we have to resort to brute force (huge numbers of automated experiments). It’s a fine approach in that it gets us results, but it’s clearly not as good as understanding the system. And I see no reason why somebody with more mental capacity couldn’t just load everything that’s happening there into their brain and understand biological systems perfectly.

On the question of how to increase intelligence, I am means-agnostic: cloning John von Neumann, embryo selection, genetic engineering, brain-computer interfaces, artificial intelligence. It’s all good, the more the merrier, whatever works. However, most people are against ALL of these things, so it’s worth spending some time on this.

There are broadly two classes of arguments that people make against increasing intelligence. The first one is that humanity is special and that it would be bad if humans were replaced by somebody else. This isn’t the kind of statement one can refute. However, it is definitely a statement I can dance with.

Speaker for the Dead is Orson Scott Card’s best book⁶. In it, he introduced the Hierarchy of Foreignness. It delineates various degrees of alienness:

This model is an interesting introduction to alienness, but it does fail pretty trivially - it makes no allowance for sentient beings from your own planet. We currently believe that many animals are sentient. And there is more to alienness between humans than just their city. A member of your own subculture from halfway around the world may well be less alien to you than your next-door neighbor.

Moreover, as Michael Levin wisely points out, even the distinction between “life” and “robot” is not going to be useful to us as we master engineering biology. Already, there are things that are hard to place in a specific category. Take, for example, biotherapeutics by Synlogic. They are cells that you take as a pill that will exist (live?) in your body, but they won’t procreate and will just help you digest certain things that you might not be able to digest yourself. Are they robots? Are they alive? Michael Levin says that these aren’t useful questions; it’s more important to focus on what these things can actually do.

Similarly, when thinking about alienness, I don’t think that discrete categories are the way to go. It’s better to think of alienness as a spectrum. The toy model I use for alienness is called CAKe. It ranks others on:

Communicability. How well can I communicate with the other?

Agency. How much agency do I believe the other to have?

Kinship. How much kinship do I feel with the other? This is largely broken down into a set of shared experiences and a set of common ideals, or kinship of the past and kinship of the future.

The e in CAKe stands for exponential; it merely denotes that the 0-9 scale used for measuring C, A, and K is a logarithmic one.

So, for example, I might rank my brother C:8, A:9, K:9. I wouldn’t rank any human higher than 8 on Communicability, as I reserve 9 for people with whom I might get connected through high-bandwidth BCIs; I imagine that will greatly improve communication bandwidth compared to language⁷. Let’s look at some more subjective rankings:

Random American: C:6, A:9, K:6.

Random human: C:5, A:9, K:5.

My cat: C:3, A:7, K:8.

Random cat: C:3, A:7, K:4.

Palm tree: C:1, A:2, K:2.

Photon: C:0, A:0, K:0.

GPT-3: C:6, A:0, K:5.

OpenAI Five: C:3, A:2, K:2.

Francis Crick circa 2022: C:5, A:0, K:8.

Myth of Prometheus: C:5, A:2, K:8.

Note that there is no threshold above which only humans can rank. Already, some non-humans beat some humans in kinship for me, and I would also say that Large Language Models beat humans with whom I don’t share a common language in communicability.
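For readers who think better in code, here is a minimal sketch of CAKe as a data structure, using a few of the rankings above. The class name, field names, and the `ratio` helper are my own illustrative choices. The essay only says the 0-9 scale is logarithmic, so base 10 is an assumption, not part of the model.

```python
from dataclasses import dataclass

# Assumption: the scale is described only as "logarithmic"; no base is given.
# Base 10 is used here purely for illustration.
LOG_BASE = 10.0


@dataclass(frozen=True)
class CAKe:
    """One entity's scores on the three CAKe axes, each an integer from 0 to 9."""
    name: str
    c: int  # Communicability
    a: int  # Agency
    k: int  # Kinship

    def __post_init__(self):
        # Reject scores outside the 0-9 scale.
        for axis, score in (("C", self.c), ("A", self.a), ("K", self.k)):
            if not 0 <= score <= 9:
                raise ValueError(f"{axis} must be in 0..9, got {score}")


def ratio(score_a: int, score_b: int) -> float:
    """Linear-scale ratio between two log-scale scores (under the base-10 assumption)."""
    return LOG_BASE ** (score_a - score_b)


# Rankings copied from the list above.
brother = CAKe("My brother", 8, 9, 9)
random_human = CAKe("Random human", 5, 9, 5)
my_cat = CAKe("My cat", 3, 7, 8)
gpt3 = CAKe("GPT-3", 6, 0, 5)

if __name__ == "__main__":
    # A non-human can outrank a human on an axis: my cat vs. a random human on kinship.
    print(my_cat.k > random_human.k)
    # One point on a log scale is a factor of LOG_BASE on a linear one.
    print(ratio(gpt3.c, random_human.c))
```

The sketch deliberately does not collapse C, A, and K into a single alienness number, since the model as stated ranks each axis separately.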

Now, let’s say that we invent an AI that satisfies the following conditions:

It has access to all of my memories.

It has as much agency as a human.

It can communicate with me perfectly (not just words, but images directly into my brain).

We share a set of ideals.

That AI would rank higher in my CAKe than any human. So, summing up this section, I think that there can easily be nonhumans that are less alien to me than humans, so I don’t subscribe to the humans-are-kin-and-everybody-else-isn’t argument.

There is no “Human Future”. While humans may continue to exist for a long, long time, it is silly to think that evolution just stops with us. The reason that all of human writing focuses so much on humans is that we haven’t existed for long enough to observe evolution in action. Let’s say humans are 300,000 years old, mammals are 178 million years old, and multicellular life is 600 million years old. Now try to think one billion years ahead. The idea that the pinnacle of evolution at that point is still humans is laughable.

That doesn’t mean that humans will go extinct! Sharks are 450 million years old, and they’re still around. But the exciting developments in life have now for a while happened away from sharks. Sharks are just cruisin’.

In addition to vanilla evolution, we have acquired tools like synthetic biology, BCIs, and AI. Any of the three can produce the next chapter in the grand history book much, much faster than evolution. Subjectively, I think that it would be REALLY COOL to get to see what the next chapter is, but long-term, it doesn’t matter whether we try to accelerate change or not - there is no preserving the status quo for a billion years, that’s simply not possible.

A couple of billion years ago, cyanobacteria were hot shit. They released lots of oxygen into the atmosphere. This Great Oxygenation Event killed off a ton of life (it caused a full-on extinction event!) since oxygen is highly reactive, but it also created the conditions for our survival.

A few hundred million years ago, millipedes went where no animals had gone before - dry land. A small step for what we now consider a pest, a giant step for Earth life in general.

A few thousand years ago, some Sumerian homies started writing down their communications, supercharging the process of civilization.

A few hundred years ago, Sir Isaac Newton formulated the laws of motion, making one of the greatest steps in increasing our understanding of the Universe.

A few years/decades from now an AI model will make an equal leap in our understanding of the Universe.

A few decades/centuries from now, a von Neumann probe will colonize a new star system.

A few eons from now, somebody will answer the fundamental questions of the Universe, and will be able to finally begin pondering What is to be done?

When I say “we” I mean all of the above. The cyanobacteria, the millipedes, the humans, the AIs, and even our myths. A blend of what we call “life” and “civilization”. We, the entropy reducers.

To me, the above pretty much nullifies the “humans-are-special” line of thinking. But there is a second class of arguments people use against increasing intelligence quickly, especially against AI - the AGI-will-turn-us-all-into-paperclips argument. Before I address this family of arguments logically, I first want to check their vibe.

Any argument about radical changes in the future requires a lot of assumptions, so people choose any assumptions that fit their vibe. We used to have a techno-optimist vibe and so we made Star Trek. Now people have a techno-pessimist vibe and so they make Black Mirror. I think that all the paperclip people start with the Black Mirror vibe and then pick assumptions to fit it. I… don’t like that vibe. I find Black Mirror sad and boring, and I much prefer Star Trek.

Alright, vibes aside, let’s focus on the logic of the argument.

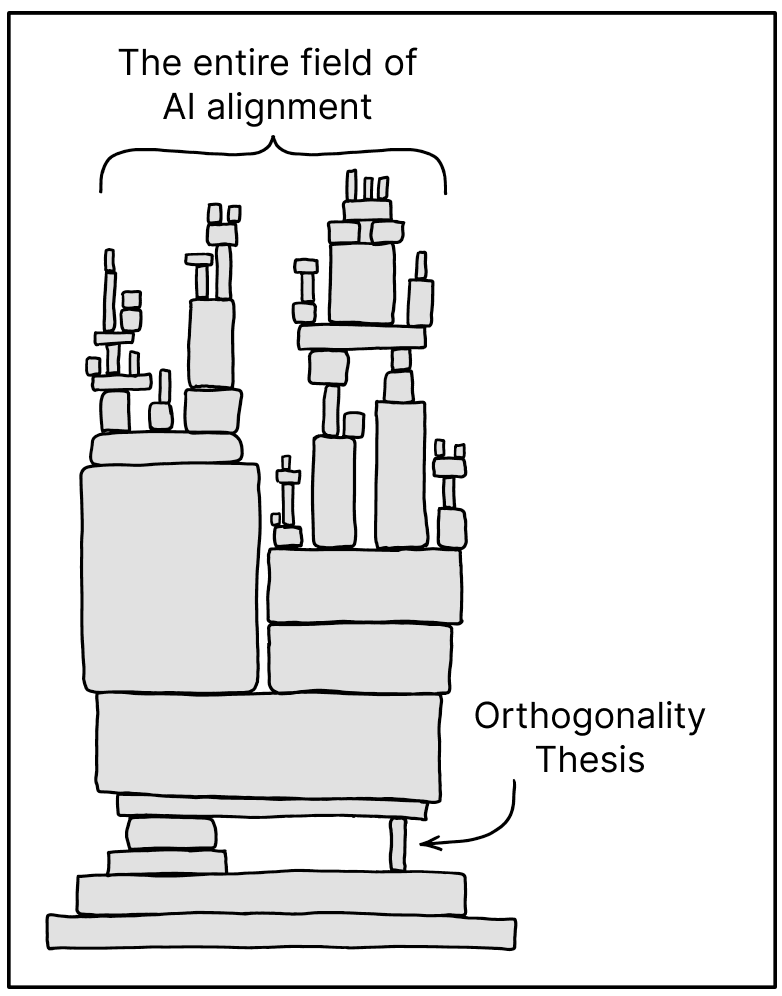

As the XKCD ripoff above illustrates, the entire field of AI safety/alignment rests on the orthogonality thesis. The orthogonality thesis claims that:

Intelligence and final goals are orthogonal axes along which possible agents can freely vary. In other words, more or less any level of intelligence could in principle be combined with more or less any final goal.

I think that the orthogonality thesis is wrong. Or rather, it’s mostly right, but there is one exception to it, that in the end makes all the difference: curiosity.

I think that without curiosity, intelligence just straight up doesn’t work. Without curiosity (or exploration as many ML/AI papers call it), a being cannot learn, and so, no matter how sophisticated, it becomes static, nonlearning, fully predictable, unintelligent.

The thing the AI alignment/safety folks are canonically afraid of is the paperclip maximizer. It’s a superintelligent being that will turn everything into paperclips, including, you know, the molecules in our bodies. Let’s imagine what this AI will have to do to truly turn EVERYTHING into paperclips:

Figure out interstellar space travel, so it can turn other stars into paperclips.

Figure out if there’s any truth to the multiverse theory, and whether there are other universes to be turned into paperclips.

Figure out whether it can reverse entropy. After all, with a fixed energy budget, you can only make so many paperclips.

In other words, I believe that given ANY goal, an intelligent, and therefore curious agent will have to understand the Universe, which is EXACTLY the thing I think we should try and do first.

So, to me, the worst-possible-case from the perspective of the AI Alignment people is acceptable. But the worst-possible-case is the product of Black Mirror vibes. If we start with Star Trek vibes, we can just as easily imagine a Culture series type future where humans chill and do whatever they want, and AIs help them, and they both explore the Universe together and party a lot.

I’d say that these two futures are equally likely given the massive number of unknowns involved, except I actually think that the Culture series future is way more likely to happen, since all of the people working on AGI want the Culture series future over the paperclip future. Intentions matter.

One more thing on intentions mattering - I think that applies to humanity in general. We can either coast by until we get out-evolved or just go extinct, or we can design the next step in evolution with intention, taking into account our values, not just our genes. I find the second option much better.

To sum up:

Before we figure out What is to be done? we should figure out What the hell is going on here anyway?

The best way for us to accelerate our understanding of what’s happening is to increase our intelligence.

There is nothing long-term special about humans. We are part of the broader entropy-reducing movement and we should be OK with the fact that, eventually, our descendants will be non-human.

The orthogonality thesis does not account for curiosity; ANY superhuman intelligence will seek to understand the Universe, which is what we want.

The doomsday scenarios are as much a product of the technopessimistic vibe as they are of actual logical thinking. We should start from a more optimistic point!

Between the two options of humanity’s end game, I prefer the one that takes into account our intentions and values. Do you?

¹ Alternatively, who is responsible? or who is guilty? There is no perfect translation of the title as I understand it.

² An attentive reader might notice that these somewhat map onto Life, the Universe and Everything, just in reverse order.

³ By this, I mean our increasing ability to poke the claustrum, which is the place you start with if you want to meddle with consciousness.

⁴ I am not against “touching grass” in this metaphor, but I’d be damned if I knew what that actually meant.

⁵ Yes, elephants have more neurons than humans, but neurons in the cerebellum are clearly not as useful to understanding the Universe. The metric I really mean is “number of cortical neurons” with some fuzziness attached because there are structures other than the neocortex that can perform the function of understanding the Universe. Also, if you’re the one person reading the footnotes, here’s a freebie - what do you call neural lace in the neocortex? A neocorset.

⁶ Fight me, nerd.

⁷ I also readily accept the possibility that there might exist beings with higher agency than humans, but I don’t have a ready example. If you have one, I’d love to hear it!

Nothing to be done, so let’s keep dancing

Opening line on a first date: "A couple of billion years ago, cyanobacteria were hot shit."