Towards Acting AI

Mr. Gorbachev, Open This Box

There was a magical moment in the history of Large Language Models - the release of GPT-3. The technology went from babbling complete nonsense to talking fairly reasonably and solving a wide variety of problems. Especially cool was the fact that all it took (not to minimize the herculean task, I’m talking strictly about the fundamental technology here) was taking an existing model and SUPERSIZING it[1].

That got a lot of people (including yours truly) very excited. People started thinking, well, if we went from nonsense to sense by SUPERSIZING… What would happen if we… MEGASIZED the SUPERSIZED model? Now GPT-4 is out, and we have an answer to that question. GPT-4 has basically achieved human-level performance on a wide variety of tasks from medicine to law to math (and more!).

There are some people who think that if we ULTRASIZE the model, we will somehow get to superhuman intelligence. I think that that’s false and that we are basically at or near the limits of LLM scaling. Consider what a transformer model “wants” when it’s training. It wants to predict the next word, to say what humans would have said. Humans say… all kinds of things, a lot of them very much below the fabled “human-level intelligence”.

Now, we’re up against the thorny issue that humans make a lot of mistakes and say all kinds of incorrect (and also mean) things. So, while we have models that CAN predict these things pretty much perfectly, we WANT models that are always right, kind, and helpful. That’s not something you can achieve by just mimicking humans.

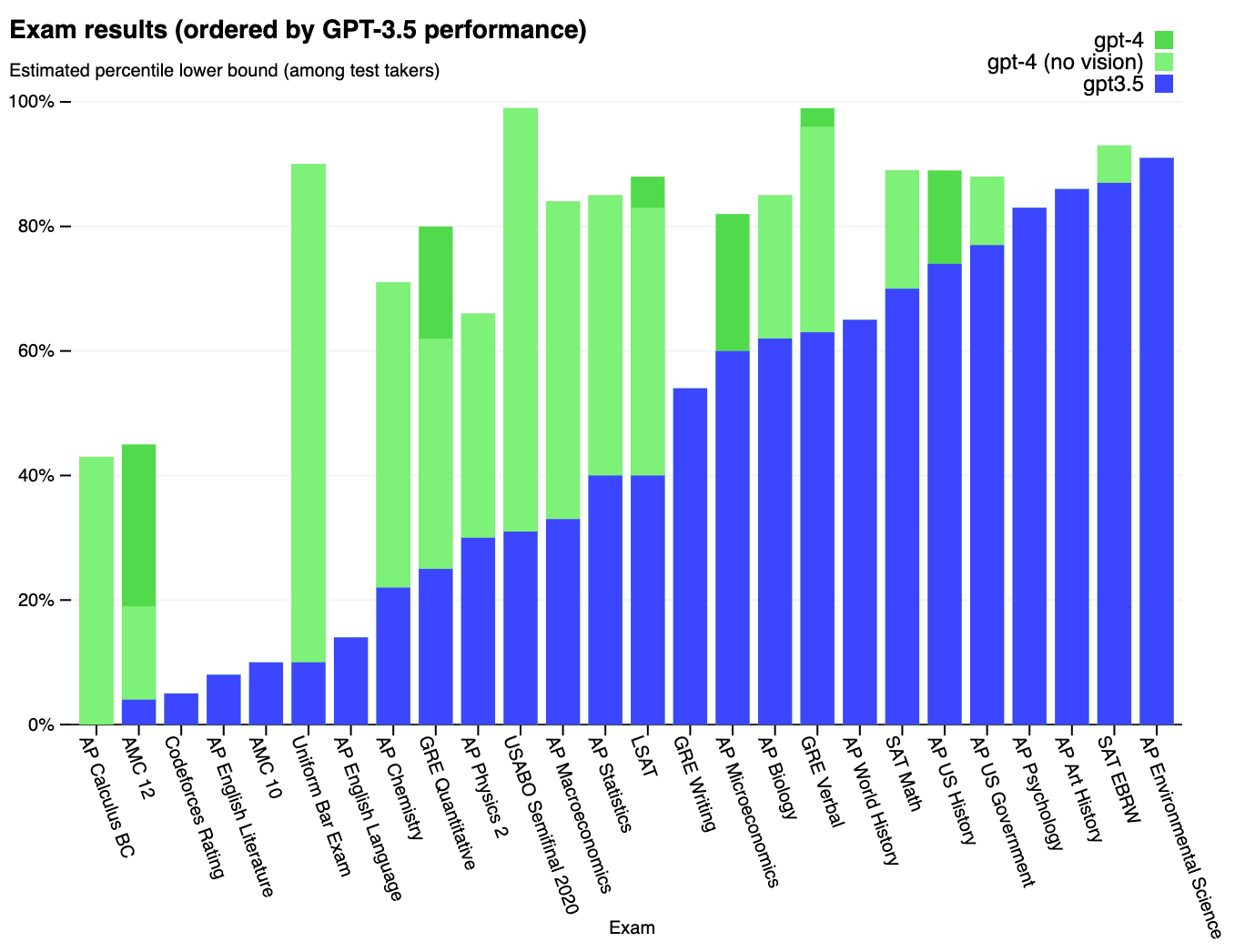

So we use a technique called RLHF (reinforcement learning from human feedback) - we ask humans to rate the things LLMs say as “helpful” or “not helpful” and the models learn our preferences. That has made them nicer, but it comes at a cost. Apparently, when LLMs learn to tell us what we want to hear, they get worse at things like economics and calculus:

Oops.

If you ask me, we’ve run into the scaling limits of LLMs. GPT-4 already has little new emergent functionality compared to GPT-3. It can mimic humans near-perfectly. Now, if we want LLMs to do something other than just mimicking humans, we have to accurately describe that something, which is a very hard human alignment challenge that cannot be solved by adding more parameters/data.

In terms of, for example, scientific discovery, we want our LLM to mimic not the average human, but John von Neumann. That means that instead of minimum-wage Mechanical Turk workers and random ChatGPT users, we need an army of John von Neumanns to train the next model through RLHF. That’s tricky. We don’t even have one John von Neumann at the moment[2].

Luckily, we’ve found out something really cool about LLMs. They can… Do things! Or at least plan to do things, and then we can translate those plans into actions using code. A great example is SayCan. A bunch of folks at Google took an LLM and attached a robot to it, and it turned out that the robot could listen to human instructions and fairly often complete them. The way it works is that when a human gives the robot a task, for example, “go slay that dragon”[3], the LLM generates a bunch of candidate next actions, and each one is rated on how likely it is to help complete the goal and how feasible it is to execute.

So the LLM might generate suggestions like “get a sword” or “shoot laser beams from my frikkin eyes” and then evaluate them like “oh, there is a conveniently located stone with a sword sticking out of it, I might be able to pull it out and slay the dragon” and “laser beams would certainly mess up that dragon, no doubt about it, but I’ve never been able to shoot them out of my frikkin eyes, and I don’t know if I can master that in the context of battling a dragon”. And then it does some math and tells its robot body to go for the sword.
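The “does some math” step can be sketched in a few lines of Python. This is only an illustration of the idea described above, not SayCan’s actual code: the `Candidate` class, the `pick_action` helper, and all of the scores are made up for the dragon example, and I’m assuming the two ratings are simply multiplied together.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    action: str
    usefulness: float   # how likely the action is to help with the instruction
    feasibility: float  # how likely the robot can actually pull it off right now


def pick_action(candidates):
    """Return the candidate with the highest usefulness * feasibility score."""
    return max(candidates, key=lambda c: c.usefulness * c.feasibility)


# Made-up scores: lasers would work great, but the robot can't shoot them.
candidates = [
    Candidate("get a sword", usefulness=0.6, feasibility=0.8),
    Candidate("shoot laser beams from my eyes", usefulness=0.9, feasibility=0.01),
]

best = pick_action(candidates)
print(best.action)  # get a sword
```

The laser beams score higher on usefulness, but the tiny feasibility drags the product down, so the sword wins.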

That is a really encouraging thing for robotics, and I expect us to get to General-Purpose Robots fairly quickly, but real-world stuff still requires a lot of time and capital. The ability of LLMs to do stuff is not limited to the real world, though. They can also do stuff in the digital realm. A few teams have shown that LLMs can learn by themselves when to use tools like a calculator, web search, translation engine, etc. to answer people’s questions. And now OpenAI is taking this concept to the next level by allowing people to hook up their own tools to ChatGPT through a plugin system.
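Here is a rough sketch of what tool use can look like mechanically, assuming the model has learned to write a marker into its output whenever it wants a calculator. The `[Calculator(...)]` marker format and the `run_tools` helper are hypothetical, invented for this example; they are not the format used by any particular paper or by ChatGPT plugins.

```python
import ast
import operator
import re

# Hypothetical marker the model emits when it wants the calculator tool.
CALL_PATTERN = re.compile(r"\[Calculator\((.*?)\)\]")

_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str):
    """Evaluate +, -, *, / arithmetic by walking the AST instead of calling eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError(f"unsupported expression: {expression!r}")
    return walk(ast.parse(expression, mode="eval").body)


def run_tools(model_output: str) -> str:
    """Replace every calculator marker in the text with the computed result."""
    return CALL_PATTERN.sub(lambda m: str(calculator(m.group(1))), model_output)


draft = "The total is [Calculator(1200 * 3 + 450)] dollars."
print(run_tools(draft))  # The total is 4050 dollars.
```

The interesting part (the model learning when to emit the marker) is not shown here; this is just the plumbing that turns the marker into an answer.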

That’s really cool, but the output of that is still mostly text. ChatGPT will be able to send some POST requests with user approval, but it won’t be able to go wild on just doing things left and right with good intentions. The thing about POST requests is that we already have buttons for most if not all of them that are fairly easy to use - do I really need a superintelligent chatbot to book a hotel on Expedia instead of just doing it through the Expedia app? I don’t think so.

I’m more interested in AI models that can do a wide range of things. By far the most interesting player in that space is a startup called Adept.ai that just conveniently raised $350M. It is making a tool that can look at your browser tab and accomplish instructions given via text. Here’s a demo of their tool finding a refrigerator on Craigslist and composing an email to the lister:

This is REALLY close to being extremely useful. If, for example, I wanted to see an ophthalmologist in Singapore, and an AI tool could automatically:

1. Search for all ophthalmologists in Singapore and get their locations, ratings, education, etc.
2. Email them for appointment availability.
3. Either scrape their prices from their websites or email the offices and ask them for their exam pricing.
4. Put all of that information in a Google Sheet for me to look at and make the appointment with the doctor I pick.

That would be really damn useful. Pretty close to Virtual Executive Assistant level. Adept is not there yet, but they are on the right track.

The big limiting factor is input length. The self-attention at the heart of the transformer architecture that we use for almost all LLMs scales quadratically with input length, meaning that doubling the size of your inputs quadruples the compute the attention layers need. That’s more or less fine for text - GPT-4 has an 8K-token context window, which is enough for most tasks. But as soon as we start getting into rich data on the web, the amount of information we might need starts rising very quickly. Luckily, the Adept team understands the problem better than just about anyone else: two of the authors of the Attention Is All You Need paper that introduced the transformer architecture are Adept cofounders. The company is already working on making attention (in particular FlashAttention, which focuses on long context windows) faster, so I will be watching it with great interest.
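To put numbers on the quadratic scaling, here is a back-of-the-envelope sketch. It only counts the pairwise attention scores for a single head in a single layer (every token attends to every token) and ignores everything else in the model; the function name is mine.

```python
def attention_score_entries(seq_len: int) -> int:
    """Number of pairwise attention scores for one head in one layer."""
    return seq_len * seq_len


base = attention_score_entries(8_000)
for n in (8_000, 16_000, 32_000):
    entries = attention_score_entries(n)
    print(f"{n:>6} tokens -> {entries:>13,} scores ({entries // base}x)")
```

Going from 8K to 16K tokens takes you from 64 million to 256 million scores (4x), and 32K tokens gets you past a billion (16x).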

But even Adept falls short of what I think is the most ambitious goal that is still likely achievable: giving an LLM access to the full functionality of a computer - programming environment, file system, network access, all of the applications, etc. - and having it learn to use all of it to achieve users’ goals. It’s really, really difficult. How do you compress the state of a computer, which is measured in gigabytes, into a context window that LLMs can work with?

I think it’s possible. Humans have direct access to much less information than the full state of a computer and are able to use one successfully nevertheless. It seems to me that we should be able to achieve great success by giving the LLM several tiers of memory.

Tier 1 would have access to the task, the high-level tools available to the LLM (applications, repos, etc.), and a text file with the top-level plan/log of what it’s doing.

Tier 2 would have indices of all of the individual tools like files, browser tabs, or terminal tabs, as well as their natural-language descriptions, e.g.

cat.jpg is an image of my cat

budget.xls is my budget for the year

ai_book_draft.doc is the draft of my book on AI supremacy

terminal_window_1 has the output of my current take_over_world.py script

And then Tier 3 would have the contents of a file or browser tab, or at least as much of them as fits into the context window. My guess is that the LLM will learn how to “compress” the information that doesn’t fit into the context window into Tiers 1 and 2 at a lower resolution. In other words, if an LLM has already noted in its log that it wrote an email and saved it to a file email.txt, it doesn’t need to keep the contents of the email in its context window while it’s working on the Python script that sends it.
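To make the three tiers a bit more concrete, here is a toy sketch of how they could be assembled into a prompt. Everything in it is an assumption for illustration: the `AssistantMemory` class, the `build_context` method, the prompt layout, and the use of a character budget as a stand-in for a token budget. A real system would also need the LLM itself to write the log and the descriptions.

```python
from dataclasses import dataclass, field


@dataclass
class AssistantMemory:
    # Tier 1: the task, the high-level tools, and the running plan/log.
    task: str
    tools: list[str]
    log: list[str] = field(default_factory=list)
    # Tier 2: one natural-language description per file or tab.
    index: dict[str, str] = field(default_factory=dict)
    # Tier 3: full contents; only the item in focus goes into the prompt.
    contents: dict[str, str] = field(default_factory=dict)

    def build_context(self, focus: str, budget_chars: int) -> str:
        """Tiers 1 and 2 in full, then as much of the focused item as fits."""
        header = "\n".join(
            [f"TASK: {self.task}", f"TOOLS: {', '.join(self.tools)}", "LOG:"]
            + [f"- {entry}" for entry in self.log]
            + ["INDEX:"]
            + [f"- {name}: {desc}" for name, desc in self.index.items()]
        )
        label = f"FOCUS ({focus}):"
        # Budget left for Tier 3 after the header, the label, and two newlines.
        remaining = max(budget_chars - len(header) - len(label) - 2, 0)
        body = self.contents.get(focus, "")[:remaining]
        return f"{header}\n{label}\n{body}"


memory = AssistantMemory(
    task="Email the landlord about the broken heater",
    tools=["browser", "terminal", "file system"],
)
memory.index["email.txt"] = "draft email to the landlord"
memory.contents["email.txt"] = "Dear landlord, the heater is broken."
memory.index["send_email.py"] = "script that sends email.txt"
memory.contents["send_email.py"] = "import smtplib  # rest of the script"
memory.log.append("Wrote the email and saved it to email.txt")

prompt = memory.build_context(focus="send_email.py", budget_chars=2000)
print(prompt)
```

While the assistant works on the script, the email survives only as a log entry and a one-line description; its full text stays out of the prompt until the focus moves back to email.txt.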

Obviously, the devil is in the details, and making something like this is insanely hard.

But I think that this AI Assistant with Full Computer Access concept is the most ambitious and technically feasible AI project one can work on right now. We know that LLMs can power it in principle. It doesn’t involve real-world training, so it is not slowed down by the tedious slowness of meatspace. It’s the most general case of an AI assistant one can imagine in the digital world.

[1] If you ask me, it’s solid proof that God is American.

[2] If you’re an AI startup, consider hiring all of the geniuses in the world.

[3] Except since it’s Google they mostly worked on getting snacks from their well-stocked kitchens.

[4] I really wanted to make a communism joke in the bit about human preferences and economics but I managed to restrain myself.
